Approx 1,800 words, 5-8 mins

The book is described on the cover, by Malcolm Gladwell, the author of several pop-science/psychology books, as:

“Dazzling … the most brilliant book on business, society and everyday life that I’ve read in years”.

That’s a mammoth overstatement. However, the book does have merit, as we shall discuss.

What is the Wisdom of Crowds?

Surowiecki remarks that to function properly, “collective intelligence” must satisfy four conditions:

- Diversity: Each person must have private information, even if this is simply an eccentric interpretation of the public facts.

- Independence: A person’s opinion is unaffected by the opinions of others.

- Decentralisation: People can specialise and draw on local knowledge.

- Aggregation: Some mechanism exists to turn private judgements into a collective decision.

If a group of people making a decision is large enough, their errors should cancel out. Each individual judgement is a mix of information and error, so once the errors cancel out, you are left with the information. That information can then be aggregated to produce a collective decision. If the four criteria are satisfied, this collective decision should be correct, or at least approximately correct.
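To make the "errors cancel out" idea concrete, here is a minimal simulation sketch. It assumes every guess is the true value plus independent, zero-mean random noise, which is my simplification rather than anything taken from the book; the function name and the numbers are purely illustrative.

```python
import random
import statistics


def simulate_crowd(true_value, crowd_size, noise_sd, seed=0):
    """Return (crowd_error, typical_individual_error) for one simulated crowd.

    Assumption for illustration only: each guess is the true value plus
    independent, zero-mean Gaussian noise. Real crowds can share biases,
    in which case the errors will not cancel this neatly.
    """
    rng = random.Random(seed)
    guesses = [true_value + rng.gauss(0, noise_sd) for _ in range(crowd_size)]

    # Aggregation step: a plain average of the private judgements.
    crowd_error = abs(statistics.mean(guesses) - true_value)

    # How far off a typical individual is, for comparison.
    typical_individual_error = statistics.mean(
        abs(guess - true_value) for guess in guesses
    )
    return crowd_error, typical_individual_error


# Hypothetical example: 1,000 people guessing a quantity whose true value is 1,000.
crowd_error, individual_error = simulate_crowd(1000, 1000, 50, seed=1)
```

Under these assumptions the averaged guess should land far closer to the truth than a typical individual does. If you add the same bias to every guess (a failure of independence or diversity), the average inherits that bias in full.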

Why did I buy the book?

I am a voracious reader of anything related to economics, psychology and decision-making. The Wisdom of Crowds in many ways satisfies all three of those interests. The book was particularly appealing because, as a business, we have individual consultants who produce reports based only on private information. I recognised a long time ago how much better some of our more complex or sensitive judgements could be if we could summon the collective wisdom of everyone, rather than simply ‘trying our best, maybe asking a colleague for help, and then relying upon QC to pick up on any problems’.

However, before I started working out a practical way to tackle this, I first needed to fully understand the problem, and the evidence-base for the four conditions of the solution (which, luckily, is methodologically repeatable in an Arbtech context).

Can people figure something out without talking to each other?

The book labours a variety of examples where experts, even panels of them, fail to predict events or measures with as much accuracy as the aggregation of a diverse group of laypeople. There are literally tens of examples in the book. But more interesting is the (surprisingly commonplace) ability of groups of people to arrive at an optimal solution without ever having communicated among themselves.

Surowiecki cites the example of a study undertaken by Norman Johnson, a theoretical physicist at the Los Alamos National Laboratory, New Mexico*. Johnson was intrigued by the possibility that groups may be able to solve problems, without the individuals in that group interacting with one another.

*Entirely off topic, but New Mexico is an absolute riot. If it isn’t on your list of places to visit, it should be. Anyway…

Johnson’s experiment involved pitting people against one another in a maze. The first time, people wandered around aimlessly, so some, by chance, turned the correct way at a node (left/right decision), and others did not. This produced random results. The second time, participants could re-negotiate the maze, this time using the information they had gleaned from the first attempt. On average, they took 34 steps to exit the maze the first time, and 12 steps the second time. But something more interesting happened…

Group intelligence

Johnson took the majority decision at each node (so if most people took a left rather than a right, he noted that down) and worked out the number of steps required to exit the maze. It was 9. Computer simulations later confirmed that this was the fastest route out of the maze. There is no possible route with fewer than 9 steps. He calls this the group’s “collective solution”. Not only was this path shorter than the average (12 steps), it was better than any one individual’s path (expertise/luck). Again, it’s worth pointing out that individuals had no interaction between themselves while negotiating the maze. This is of course an academic exercise, but there are many examples of this collective solution translating into real life.
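The majority-at-each-node idea can be sketched in a few lines. Everything here is hypothetical: the tiny four-node maze, the three recorded runs and the function names are mine, invented to show the mechanism, and are not Johnson’s actual maze or data.

```python
from collections import Counter

# Hypothetical maze: each node maps a left/right turn to the node it leads to.
MAZE = {
    "A": {"L": "B", "R": "C"},
    "B": {"L": "A", "R": "D"},
    "C": {"L": "D", "R": "A"},
    "D": {"L": "EXIT", "R": "B"},
}

# Hypothetical data: the turn each person favoured at each node they visited.
RUNS = [
    {"A": "L", "B": "R", "D": "L"},
    {"A": "R", "C": "L", "D": "L"},
    {"A": "L", "B": "R", "D": "R"},
]


def majority_policy(runs):
    """Pick the most popular turn at every node, pooling all runs."""
    votes = {}
    for run in runs:
        for node, turn in run.items():
            votes.setdefault(node, Counter())[turn] += 1
    # On a tie, most_common returns whichever turn was recorded first.
    return {node: counts.most_common(1)[0][0] for node, counts in votes.items()}


def steps_to_exit(maze, policy, start, goal, max_steps=100):
    """Follow the policy from start; return the step count, or None if stuck."""
    node, steps = start, 0
    while node != goal:
        if steps >= max_steps or node not in policy:
            return None
        node = maze[node][policy[node]]
        steps += 1
    return steps


collective = majority_policy(RUNS)
collective_steps = steps_to_exit(MAZE, collective, "A", "EXIT")
```

Nobody in this toy data talks to anybody else; the only "communication" is the tally. In this made-up maze the majority route happens to be a shortest one, but that is a property of the example, not a guarantee.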

Surowiecki talks about e.g. 100 people solving a problem and the “average answer often being at least as good as the answer of the smartest member”. Make of that what you will. It’s an easy statement to pick apart; chiefly, what does he mean by smartest person? The best equipped to solve that problem? The most “expert”, howsoever you wish to define that term?

Related to that, one criticism I have of the book is that, while I agree with Surowiecki’s sentiments throughout, I do feel his choice of terminology/nomenclature/vocabulary is often misguided: to appeal to a wider audience, he has compromised his ability to convince his more astute and discriminating readers. But that is a minor flaw, and one I can appreciate he has probably chosen deliberately as an author, rather than through mere sloppiness.

Experts

There are of course many modern, real-world examples of experts doing a fine job of prediction.

Nate Silver of FiveThirtyEight fame (and author of The Signal and the Noise—an outstanding book that I will review soon) gets it right. A lot. But, he uses a statistical analysis of public and private (diverse, independent, decentralised) datasets (aggregated) using a proprietary system (software). So basically, he’s doing what the book tells us to do.

In other walks of life, though, there are true experts. These are people who cannot be beaten (in terms of the quality of decision) by sheer numbers. Here are two examples:

A chess grandmaster. No amount of aggregating people’s judgements into a single decision (move) is likely to beat Garry Kasparov in his prime, especially if all those people have is an esoteric twist on some public information.

Side note: Even IBM’s Deep Blue supercomputer, capable of analysing a staggering 200 million positions per second, had a hard time. In fact, it lost the first 6 game series (1996) 4 – 2, and only won the second (1997) 3½ – 2½.

A Royal Marines Commando. Again, experience counts. Under the pressure and stress of battle, in real time, when the lead is flying on a two-way range, there is almost no chance that a collective decision from laypersons would outperform a veteran of war.

In these domains*, and I am sure there are countless others (complex surgical procedures spring to mind), mastery is everything.

*I am a big fan of the concept of “domains” and it’s something I will opine on in a separate blog, as they are ruthlessly enforced at Arbtech, in my view, to the mutual and substantial benefit of literally everyone.

It is interesting to note that studies undertaken in the 1970s (Simon & Chase) demonstrated that mastery was distinct from intelligence, i.e. any true expertise is “spectacularly narrow” and doesn’t mean that person can translate their skill well to other problems and tasks. This is obvious; don’t ask a chess player to command a platoon under fire and expect to triumph. But the subtler implication is that there are no measurable attributes by which to assess someone’s decision-making in a general sense, and thus one cannot become expert at it. (If there were such a thing as an expert decision-maker, the psychologist Daniel Kahneman, Nobel laureate in economics and author of the 2011 book Thinking, Fast and Slow, is probably as close as it gets.)

Here, in the domain of generalised decision-making, the collective reigns supreme, given the right environment (the four criteria set out above). If a collective solution can be arrived at without risking homogeneity and groupthink—and therefore exploiting as much tacit knowledge as possible—it will almost always outperform.

How have I put this to work at Arbtech?

Decisions for ecologists are often simple; fun even. But, sometimes, numbingly complex. The nature (pun!) of the way ecological systems fail is cascading. This makes ostensibly unpretentious decisions, such as the habitat value classification of a patch of grass for reptiles on a spectrum that runs from “substantive” to “sweet FA”, compounding and important—both for the species (impact) and client (downstream consequences).

Working on cognition problems, of any sort, is normally extremely demanding, both in time and on resources. Hence, my principal goal in helping our consultants make better on- and off-site decisions, faster, was to build a tool that required almost no investment in time or resources from the person with the problem, and even less from the people who muck in to help that person out.

Enter Andy

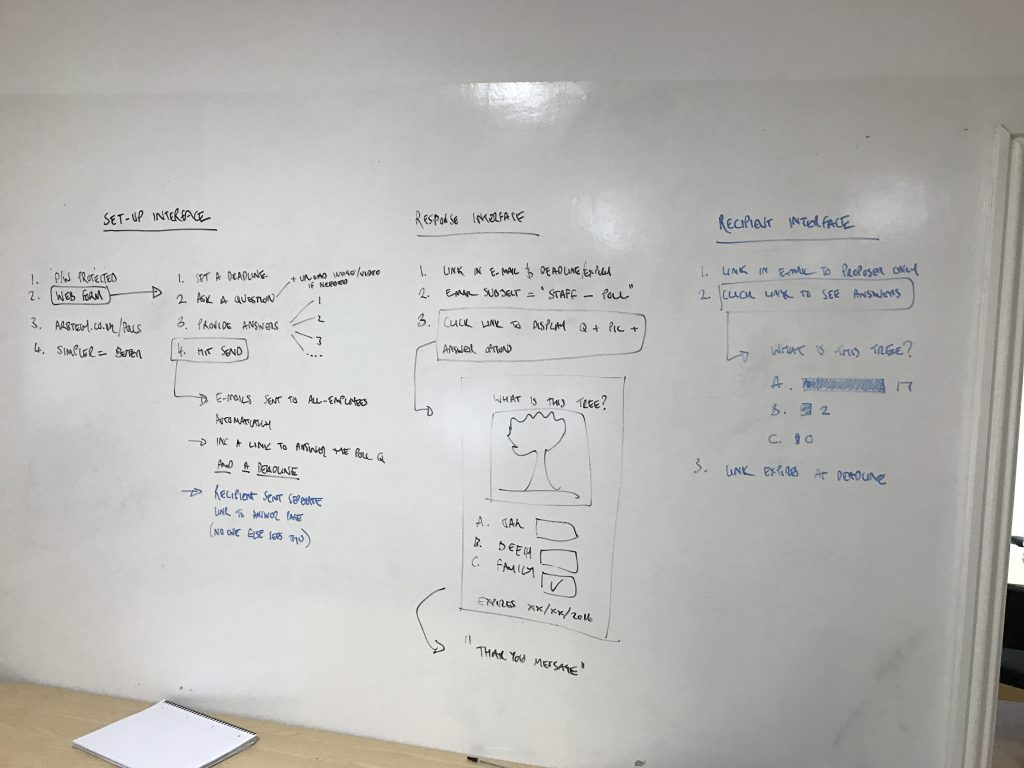

I hashed out the following on our HQ whiteboard wall, and Andy Ward, our computer wiz, did the rest:

Thanks to Andy’s brilliance, we now have a polling tool hosted online where our consultants, management and admin staff can get help and contribute to each other’s problems. It goes like this:

- Write a question (e.g. “Is this good reptile habitat?”), and choose between setting up multiple choice answers (e.g. high/moderate/low), or allowing open ended answers.

- Upload a picture or video (e.g. of potential reptile habitat).

- Choose a deadline for responses (you need a response in time to write your report!).

- Hit send.

- It now goes automatically to all Arbtechers, and arrives as a one line e-mail with a link to respond to the poll.

- The responders click the link, view the question and any picture/video, and select their answer. They cannot see the results or any other information, to preserve independence and diversity.

- The poller can then see the results, either live or after the deadline expires. The results are completely anonymised, again to preserve independence and diversity.

- The poller now has a “collective solution” with which s/he can inform the decision.

Continuing our reptile habitat example: Ultimately, knowing that 3/15 people answered “low” habitat value, 7/15 went with “moderate”, and 5/15 said “high”, doesn’t give you the answer. However, it does show you that if you thought “low”, while you might be right, it’s not the consensus view, and you ought to re-examine your site notes and photographs before making that call.
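For anyone who wants to see that arithmetic spelled out, here is a minimal sketch of the tallying step using the 3/7/5 split above. It is not the code behind our tool; the function name and the returned fields are invented for illustration.

```python
from collections import Counter


def summarise_poll(responses, my_view):
    """Tally anonymous answers and compare the most popular one with my own."""
    counts = Counter(responses)
    total = len(responses)

    # The most popular answer is a plurality, not necessarily a majority.
    most_popular, _votes = counts.most_common(1)[0]

    return {
        "shares": {answer: n / total for answer, n in counts.items()},
        "most_popular": most_popular,
        "agrees_with_me": most_popular == my_view,
    }


# The reptile habitat example: 3 "low", 7 "moderate", 5 "high".
responses = ["low"] * 3 + ["moderate"] * 7 + ["high"] * 5
summary = summarise_poll(responses, my_view="low")
```

Here `summary["agrees_with_me"]` comes out false for someone who thought “low”, which is the prompt to go back to the site notes rather than proof that the call was wrong.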

Conclusion

The book is great. It could probably be summarised on a side of A4, but that should not be taken as a reflection of the value of its core message. I have effected a change in process at Arbtech to allow us to capture the “wisdom of crowds”, and hopefully will reap the benefits for many years to come. For £9.99, and a few hours’ reading, that’s not a bad ROI.

I hope you enjoyed this review. Please let me know what you think, and feel free to share it.

Best wishes,

-R.

References:

Surowiecki, J. (2005). The Wisdom of Crowds: Why the Many Are Smarter Than the Few and How Collective Wisdom Shapes Business, Economies, Societies and Nations. New York: Knopf Doubleday Publishing Group.
